xAI, the artificial intelligence company founded by Elon Musk, has unveiled Grok-3, a large language model (LLM) that the company presents as more capable and safer than earlier AI systems. As a next-generation conversational AI, Grok-3 powers the Grok chatbot on X and in xAI's own apps. Yet even as the field continues to advance, Grok-3 has highlighted ongoing issues with openness and transparency in AI development.

Grok-3 shows how companies weigh ethical guardrails against the capabilities of sophisticated artificial intelligence. xAI's approach to disclosing model details, training data, and usage guidelines has sparked debate about how open such AI systems really are, despite their technical advances. This article examines these issues and their broader implications.

Openness in artificial intelligence generally means that researchers can readily access models, data, and training methods that are publicly available and understandable. Although xAI describes Grok-3 as more capable and safer than earlier versions, the model is not fully open source. Citing safety and competitive concerns, xAI has disclosed little about its training data and internal architecture.

This limited openness hampers independent auditing and reproducibility. Without full transparency, researchers cannot thoroughly evaluate Grok-3's claims about fairness, bias mitigation, or safety features. Critics argue that such secrecy erodes trust and slows progress toward reducing the risks of artificial intelligence, such as inaccurate outputs or bias.

Transparency is more than openness for its own sake: it means making an AI system's decisions and outputs understandable and explainable. Because Grok-3 serves as the backbone of widely used applications such as chatbots, its behavior affects millions of people. Transparency is therefore a necessity, as it helps surface biases, unintended behavior, and security risks.

Although xAI has built safety features into Grok-3 to prevent harmful output, it remains unclear how users or regulators can verify these claims without clear documentation and access. Transparency fosters accountability: if an AI system produces contentious output, understanding how that happened can help prevent a recurrence and improve the design.

xAI says it treats the responsible development of AI as a priority and is taking steps to make Grok-3 more transparent. The company shares some of its safety research, publishes usage guidelines, and works with external partners to monitor the model's behavior. It is also in discussions with policymakers to help shape AI regulation.

At the same time, xAI is weighing the benefits of transparency against the risk of misuse. A full release of the model would make it far easier for bad actors to repurpose it for harmful activities or to exploit vulnerable people. This balancing act between transparency and safety reflects the broader set of challenges AI companies face worldwide.

API access enables controlled transparency, allowing selected third parties to evaluate Grok-3 under closely monitored conditions.

The debate over Grok-3's openness and honesty raises pressing ethical questions for the future governance of artificial intelligence. Should an organization make transparency its top priority, both to promote innovation and to enable independent oversight in case of misconduct?

The model's opacity also raises concerns about equity and inclusiveness. Without full visibility, hidden biases or errors in AI output are more likely to harm marginalized communities. Moreover, transparency and accountability are crucial for maintaining public confidence in artificial intelligence; without them, we risk polarizing public opinion or provoking a backlash.

This debate in AI governance highlights the need for frameworks that balance the public interest with innovation.

Grok-3 marks a notable step forward for xAI, yet it also exposes the challenges that continue to complicate the pursuit of ethical AI. The model advances AI capability while xAI emphasizes safe operation, and it shows how contested openness remains in the field. Whatever xAI's stated commitment to transparency, its choices reveal the difficult trade-offs AI developers must make between innovation, safety, and public accountability.

Moving toward responsible AI will require collaboration among the private sector, researchers, regulators, and the general public. Grok-3 illustrates why transparency matters, not only technically but also philosophically, as the foundation on which AI ethics for everyone rests.

Advertisement

What happens when transformer models run faster right in your browser? Transformers.js v3 now supports WebGPU, vision models, and simpler APIs for real ML use

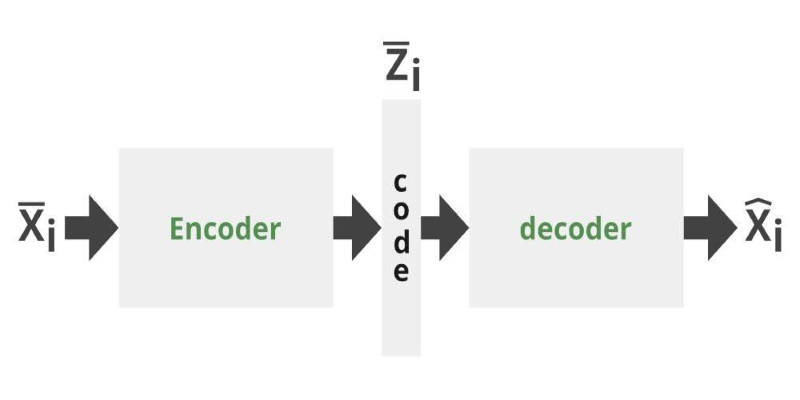

Can you get the best of both GANs and autoencoders? Adversarial Autoencoders combine structure and realism to compress, generate, and learn more effectively

Discover 10 job types AI might replace by 2025. Explore risks, trends, and how to adapt in this complete workforce guide.

XAI’s Grok-3 AI model spotlights key issues of openness and transparency in AI, emphasizing ethical and societal impacts

Want to use ChatGPT without a subscription? These eight easy options—like OpenAI’s free tier, Bing Chat, and Poe—let you access powerful AI tools without paying anything

How the Hugging Face embedding container simplifies text embedding tasks on Amazon SageMaker. Boost efficiency with fast, scalable, and easy-to-deploy NLP models

How Python Tuple Methods and Operations work with real code examples. This guide explains tuple behavior, syntax, and use cases for clean, effective Python programming

How to convert Python dictionary to JSON using practical methods including json.dumps(), custom encoders, and third-party libraries. Simple and reliable techniques for everyday coding tasks

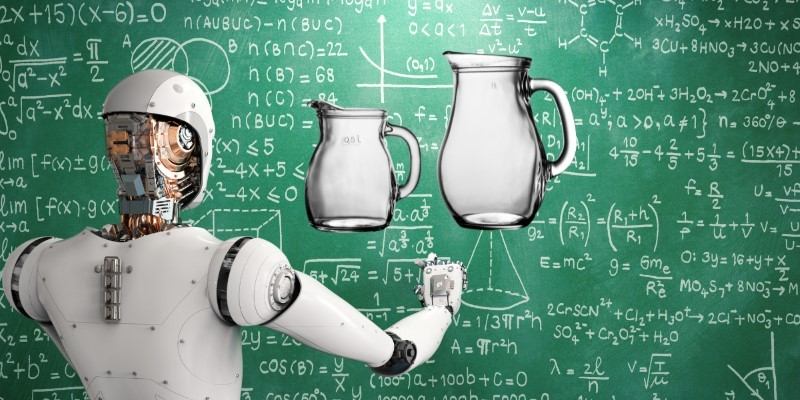

The Water Jug Problem is a classic test of logic and planning in AI. Learn how machines solve it, why it matters, and what it teaches about decision-making and search algorithms

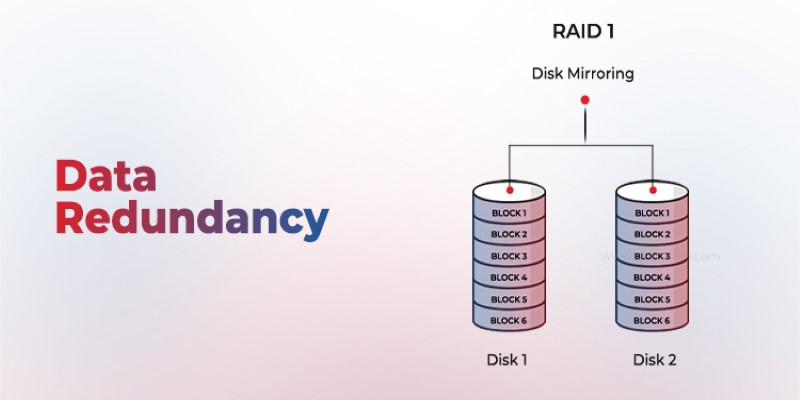

Is your system storing the same data more than once? Data redundancy can protect or complicate depending on how it's handled—learn when it helps and when it hurts

See how Intelligent Process Automation helps businesses automate tasks, reduce errors, and enhance customer service.

AI agents aren't just following commands—they're making decisions, learning from outcomes, and changing how work gets done across industries. Here's what that means for the future