
You're working with a Python dictionary and need to turn it into JSON. Maybe it's for a web app, maybe you're saving settings, or maybe you're sending data to an API. Either way, this conversion is a routine part of programming. It sounds simple, and most of the time it is. However, Python offers several ways to do it, each suited to a different purpose. Knowing these options helps you write cleaner, faster, and more predictable code. You don't need to overthink it; just understand what each method does and when it makes sense to use it.

The most common approach uses Python's built-in json module. Its dumps() function is the first tool to reach for when you need to turn a dictionary into a JSON-formatted string.

```python
import json

data = {'name': 'John', 'age': 30, 'city': 'New York'}

json_string = json.dumps(data)

print(json_string)
```

This prints:

```json
{"name": "John", "age": 30, "city": "New York"}
```

The result is an ordinary Python string, not a dictionary or a file. Its contents are valid JSON text, so you can send it across a network or write it to a file.
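To see what dumps() actually hands back, the short sketch below checks the type of the result and parses it again with json.loads(), the standard library's inverse of dumps(). It reuses the dictionary from the example above.

```python
import json

data = {'name': 'John', 'age': 30, 'city': 'New York'}

json_string = json.dumps(data)

# dumps() returns an ordinary str containing JSON text
print(type(json_string))  # <class 'str'>

# json.loads() parses the string back into a new, equal dictionary
restored = json.loads(json_string)
print(restored == data)  # True
```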

If you're saving the dictionary to a file, use json.dump() instead of dumps(). It writes JSON directly into a file object.

```python
import json

data = {'name': 'Alice', 'age': 25}

with open('data.json', 'w') as f:
    json.dump(data, f)
```

This creates a file named data.json with content like:

```json
{"name": "Alice", "age": 25}
```

The key difference is that dump() writes its output to a file (or any object with a write() method), whereas dumps() returns a string.
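A quick way to see the difference without touching the disk is to write into an in-memory io.StringIO buffer, which behaves like a text file. This is a sketch for illustration: the buffer stands in for the data.json file used above, and json.load() reads the result back.

```python
import io
import json

data = {'name': 'Alice', 'age': 25}

# dump() accepts any writable text file-like object, not only real files
buffer = io.StringIO()
json.dump(data, buffer)

# The text written by dump() matches the string returned by dumps()
print(buffer.getvalue() == json.dumps(data))  # True

# json.load() reads JSON back from a file-like object
buffer.seek(0)
loaded = json.load(buffer)
print(loaded)  # {'name': 'Alice', 'age': 25}
```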

Sometimes, you want the output to be readable, especially during debugging. json.dumps() can format the string using the indent and sort_keys arguments.

```python
import json

data = {'z': 1, 'a': 2, 'b': 3}

json_string = json.dumps(data, indent=4, sort_keys=True)

print(json_string)
```

Output:

```json
{
    "a": 2,
    "b": 3,
    "z": 1
}
```

Indentation makes the structure easier for humans to read, and sort_keys=True sorts the keys alphabetically. Both arguments work the same way with json.dump().
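Formatting can also go the other way. As an illustrative addition, the separators argument of json.dumps() controls the punctuation between items; passing (',', ':') removes the spaces that dumps() adds by default, which gives the smallest output when readability doesn't matter.

```python
import json

data = {'z': 1, 'a': 2, 'b': [3, 4]}

# By default, dumps() puts a space after each ',' and ':'
default_output = json.dumps(data)

# separators=(',', ':') drops that whitespace; sort_keys still applies
compact_output = json.dumps(data, separators=(',', ':'), sort_keys=True)

print(default_output)  # {"z": 1, "a": 2, "b": [3, 4]}
print(compact_output)  # {"a":2,"b":[3,4],"z":1}
```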

Python dictionaries can hold values that JSON has no type for, such as datetime objects. If you try to convert them directly, json.dumps() raises a TypeError. The default argument lets you supply a function that json calls for any object it can't serialize on its own; the function's return value is serialized in its place.

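```python
import json
from datetime import datetime

def converter(obj):
    # Called only for objects json can't serialize natively
    if isinstance(obj, datetime):
        return obj.isoformat()
    # Reject anything else instead of silently returning None (which becomes null)
    raise TypeError(f'Object of type {type(obj).__name__} is not JSON serializable')

data = {'event': 'login', 'time': datetime.now()}

json_string = json.dumps(data, default=converter)

print(json_string)
```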

The datetime object is now converted to an ISO 8601 string. You can extend converter() to handle other types as well.
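As one way of extending converter(), the sketch below also handles date, Decimal, and set values. Which extra types to support, and how to represent them (Decimal as a string, a set as a sorted list), are assumptions chosen for illustration; the fixed dates keep the output predictable. Anything unrecognized still raises TypeError, so unsupported values are never silently written as null.

```python
import json
from datetime import date, datetime
from decimal import Decimal

def converter(obj):
    # datetime is a subclass of date, so this branch covers both types
    if isinstance(obj, date):
        return obj.isoformat()
    # Decimal becomes a string so no precision is lost to float conversion
    if isinstance(obj, Decimal):
        return str(obj)
    # JSON has no set type; a sorted list gives stable output
    if isinstance(obj, set):
        return sorted(obj)
    # Anything else is rejected, mirroring json's own error
    raise TypeError(f'Object of type {type(obj).__name__} is not JSON serializable')

data = {
    'day': date(2024, 1, 15),
    'logged_at': datetime(2024, 1, 15, 9, 30),
    'price': Decimal('19.99'),
    'tags': {'b', 'a'},
}

json_string = json.dumps(data, default=converter)
print(json_string)
# {"day": "2024-01-15", "logged_at": "2024-01-15T09:30:00", "price": "19.99", "tags": ["a", "b"]}

# An unsupported type still fails loudly
try:
    json.dumps({'value': object()}, default=converter)
except TypeError as exc:
    error_message = str(exc)
print(error_message)  # Object of type object is not JSON serializable
```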

For a more reusable approach, create a custom encoder by subclassing json.JSONEncoder and overriding its default() method, then pass the class to dumps() through the cls argument.

```python
import json
from datetime import datetime

class CustomEncoder(json.JSONEncoder):
    def default(self, obj):
        if isinstance(obj, datetime):
            return obj.strftime('%Y-%m-%d %H:%M:%S')
        return super().default(obj)

data = {'timestamp': datetime.now()}

json_string = json.dumps(data, cls=CustomEncoder)

print(json_string)
```

This lets you reuse the encoder across different parts of your code, which can be cleaner if you have many custom types.
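To illustrate the reuse point, the sketch below passes the same encoder class to both json.dumps() and json.dump(), which accept the cls argument alike, and nests a datetime inside a list to show that default() is called wherever such a value appears. The fixed timestamp and the in-memory buffer (standing in for a real file) are assumptions made so the output is predictable.

```python
import io
import json
from datetime import datetime

class CustomEncoder(json.JSONEncoder):
    def default(self, obj):
        if isinstance(obj, datetime):
            return obj.strftime('%Y-%m-%d %H:%M:%S')
        # Defer to the base class, which raises TypeError
        return super().default(obj)

stamp = datetime(2024, 1, 15, 9, 30, 0)

# The same class works with dumps(), even for deeply nested values...
as_string = json.dumps({'events': [{'at': stamp}]}, cls=CustomEncoder)

# ...and with dump(), which accepts the same cls argument
buffer = io.StringIO()
json.dump({'at': stamp}, buffer, cls=CustomEncoder)

print(as_string)          # {"events": [{"at": "2024-01-15 09:30:00"}]}
print(buffer.getvalue())  # {"at": "2024-01-15 09:30:00"}
```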

If speed is important and you’re working with a large dataset, the orjson library can be a better option. It’s a third-party package known for its speed and efficiency.

```python
import orjson

data = {'x': 10, 'y': 20}

json_bytes = orjson.dumps(data)

json_string = json_bytes.decode('utf-8')

print(json_string)
```

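Note that orjson.dumps() returns bytes rather than str, so you need to decode the result if you want a string. orjson is fast and compact, and it serializes types such as datetime natively, but it offers less customization than the json module: there is no cls argument, and formatting is controlled through option flags (for example, orjson.OPT_INDENT_2 for two-space indentation) rather than arbitrary indent values.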

Install it with:

pip install orjson
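If orjson is an optional dependency in your project, one common pattern is to fall back to the standard library when it isn't installed. The helper below, dumps_str(), is a hypothetical name used for illustration; it always returns a str. The fallback's compact separators and ensure_ascii=False only approximate orjson's output: they match for simple data like this but may differ in edge cases.

```python
import json

try:
    import orjson
except ImportError:  # orjson is treated as optional in this sketch
    orjson = None

def dumps_str(data):
    """Hypothetical helper: serialize data to a JSON str, preferring orjson."""
    if orjson is not None:
        # orjson.dumps() returns bytes, so decode to get a str
        return orjson.dumps(data).decode('utf-8')
    # Fallback: compact separators and no ASCII escaping, roughly like orjson
    return json.dumps(data, separators=(',', ':'), ensure_ascii=False)

print(dumps_str({'x': 10, 'y': 20}))  # {"x":10,"y":20}
```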

Another fast third-party alternative is ujson (UltraJSON). It is simpler than orjson, returns a str, and its dumps() call works much like the built-in json.dumps().

```python
import ujson

data = {'name': 'Eva', 'age': 22}

json_string = ujson.dumps(data)

print(json_string)
```

It doesn't support every feature of the standard library (there is no cls argument, for example), but for basic use it's fast and easy.

Install it with:

pip install ujson

If your data is in a pandas DataFrame, converting it to JSON is built in. Pandas offers flexible ways to serialize data, especially for structured tabular formats.

```python
import pandas as pd

df = pd.DataFrame([
    {'id': 1, 'name': 'John'},
    {'id': 2, 'name': 'Jane'}
])

json_string = df.to_json(orient='records')

print(json_string)
```

Output:

```json
[{"id":1,"name":"John"},{"id":2,"name":"Jane"}]
```

With orient='records', each row becomes one JSON object in a list. This is useful when you're moving data between systems or preparing data for APIs that expect JSON arrays.

When working with asynchronous Python code, especially in web servers or event-driven apps, it helps to write JSON to a file without blocking the event loop. With the third-party aiofiles package, you can combine json.dumps() with asynchronous file operations.

```python
import json
import asyncio

import aiofiles

data = {'status': 'success', 'code': 200}

async def write_json():
    json_string = json.dumps(data)
    async with aiofiles.open('async_data.json', 'w') as f:
        await f.write(json_string)

asyncio.run(write_json())
```

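aiofiles runs the underlying file operations in a thread pool, so the event loop stays free to handle other tasks while the write completes. Note that json.dumps() itself still runs synchronously, which matters only for very large payloads.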

Install aiofiles with:

pip install aiofiles
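If you'd rather not add a dependency, the standard library offers a different technique: asyncio.to_thread() (Python 3.9 and later) runs an ordinary blocking function in a worker thread and lets you await the result. This is an alternative to aiofiles rather than part of it, and write_json_sync() is a hypothetical helper name.

```python
import asyncio
import json

data = {'status': 'success', 'code': 200}

def write_json_sync(path, payload):
    # Ordinary blocking write using only the standard library
    with open(path, 'w', encoding='utf-8') as f:
        json.dump(payload, f)

async def write_json(path):
    # Run the blocking function in a worker thread so the event loop stays free
    await asyncio.to_thread(write_json_sync, path, data)

asyncio.run(write_json('async_data.json'))
```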

The built-in json module can't serialize instances of custom classes (or data structures containing them) without extra work. jsonpickle is designed for that: it converts almost any Python object into JSON, including user-defined classes.

```python
import jsonpickle

class Person:
    def __init__(self, name, age):
        self.name = name
        self.age = age

person = Person("Alice", 28)

json_string = jsonpickle.encode(person)

print(json_string)
```

This gives:

```json
{"py/object": "__main__.Person", "name": "Alice", "age": 28}
```

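You can later rebuild the object with jsonpickle.decode(). It's great for situations where you don't want to define by hand how every object type is serialized. Only decode data you trust, though: because the JSON names the class to recreate, decoding untrusted input is a security risk.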

Install it with:

pip install jsonpickle
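For comparison, when your classes hold plain data you can often stay with the built-in json module. The sketch below assumes Person is rewritten as a dataclass (an assumption; the jsonpickle example above uses an ordinary class) so that dataclasses.asdict() can turn it into a dictionary.

```python
import json
from dataclasses import asdict, dataclass

@dataclass
class Person:
    name: str
    age: int

person = Person('Alice', 28)

# asdict() converts the dataclass (recursively) into a plain dictionary
json_string = json.dumps(asdict(person))
print(json_string)  # {"name": "Alice", "age": 28}

# Rebuilding is explicit: parse the JSON, then call the constructor yourself
restored = Person(**json.loads(json_string))
print(restored == person)  # True
```

Unlike jsonpickle's output, this JSON carries no class name, so your code decides which class to rebuild when decoding.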

You don't need to memorize everything, but a few reliable methods in your toolkit make life easier. json.dumps() and json.dump() cover most everyday needs. For large datasets where speed matters, orjson or ujson can give you a boost. If you're dealing with types json doesn't support, such as datetime, a default function or a custom encoder handles them, and jsonpickle covers arbitrary objects. If your data lives in a DataFrame, pandas does the job cleanly. Each option has a purpose, and the one you pick depends on what your program is doing. Once you get used to these, turning a Python dictionary into JSON becomes second nature.

Advertisement

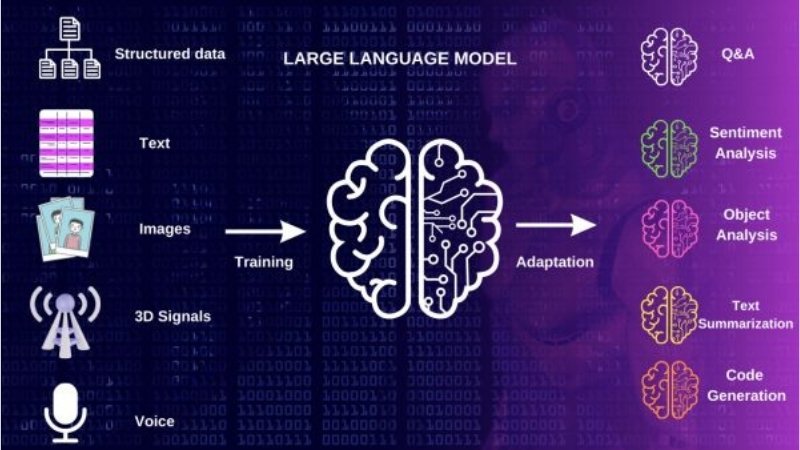

Multilingual LLM built with synthetic data to enhance AI language understanding and global communication

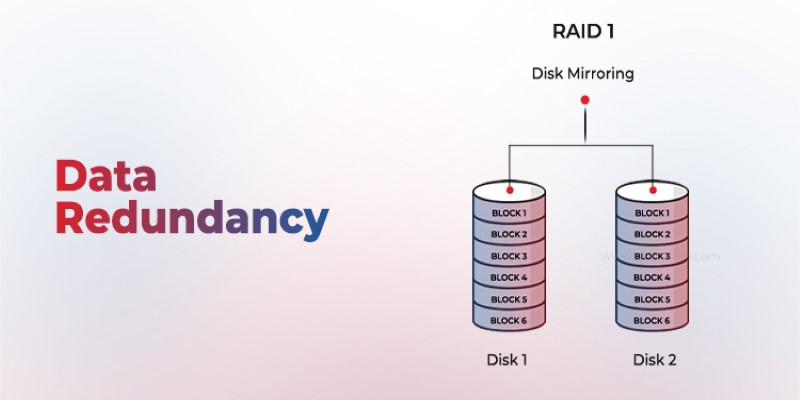

Is your system storing the same data more than once? Data redundancy can protect or complicate depending on how it's handled—learn when it helps and when it hurts

Want to use ChatGPT without a subscription? These eight easy options—like OpenAI’s free tier, Bing Chat, and Poe—let you access powerful AI tools without paying anything

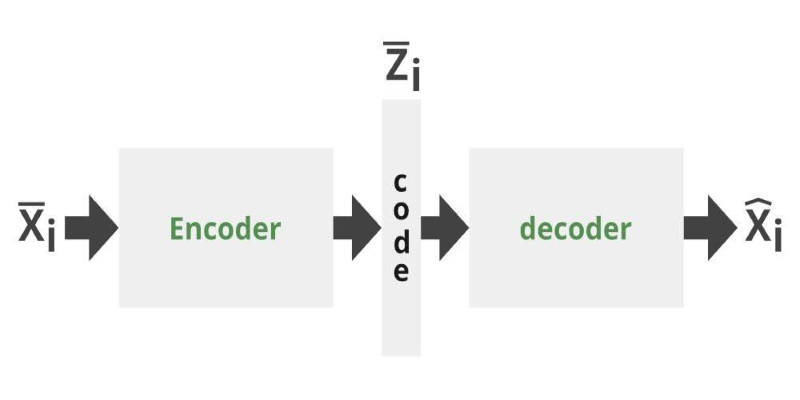

Can you get the best of both GANs and autoencoders? Adversarial Autoencoders combine structure and realism to compress, generate, and learn more effectively

Which data science startups are changing how industries use AI? These ten U.S.-based teams are solving hard problems with smart tools—and building real momentum

How Python Tuple Methods and Operations work with real code examples. This guide explains tuple behavior, syntax, and use cases for clean, effective Python programming

Curious about data science vs software engineer: which is a better career? Explore job roles, skills, salaries, and work culture to help choose the right path in 2025

See how Intelligent Process Automation helps businesses automate tasks, reduce errors, and enhance customer service.

The Water Jug Problem is a classic test of logic and planning in AI. Learn how machines solve it, why it matters, and what it teaches about decision-making and search algorithms

How switching from chunks to blocks is accelerating uploads and downloads on the Hub, reducing wait times and improving file transfer speeds for large-scale projects

How AI in mobiles is transforming smartphone features, from performance and personalization to privacy and future innovations, all in one detailed guide

How 7 popular apps are integrating GPT-4 to deliver smarter features. Learn how GPT-4 integration works and what it means for the future of app technology