Transformers.js has become the go-to library for running pre-trained models directly in the browser. With version 3, it brings an impressive set of updates, from WebGPU support to expanded model types and smarter task handling. Whether you're working on natural language processing, computer vision, or audio projects, this update makes things simpler, faster, and more versatile.

Let’s go through what’s new—and what it means for how we build in-browser machine learning tools today.

If you've been waiting for WebGPU support in Transformers.js, version 3 has made it official. WebGPU is the next-generation graphics and compute API designed for the web, offering better performance than WebGL and unlocking new potential for running ML models in the browser.

Before WebGPU, running transformer models in the browser usually relied on WebGL or on the CPU through WebAssembly. It worked, but it had clear limits. WebGL was designed for rendering graphics rather than general-purpose computation, and CPU inference can be slow, especially for large models.

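With WebGPU, things change: inference can run on the GPU through an API built for general-purpose compute as well as graphics, which is where the speedup over WebGL and CPU execution comes from.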

Keep in mind that WebGPU is still rolling out across browsers. Right now, it's best supported in Chromium-based browsers such as Chrome and Edge, where it is enabled by default on desktop. Firefox and Safari have been slower to ship it, and depending on the version you may need to turn on a feature flag. Still, for developers building future-facing applications, WebGPU is where the browser is headed, and Transformers.js is ready for it.
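Because support varies by browser, it can help to check for WebGPU before asking for it. The snippet below is a minimal sketch, not the library's own detection logic. It assumes the v3 package name `@huggingface/transformers`, installed through a bundler, and the `device` option of `pipeline()`. The helper names `pickDevice` and `createExtractor` and the model ID are illustrative choices.

```javascript
// Returns 'webgpu' when the browser exposes navigator.gpu, otherwise 'wasm'.
// `nav` is injectable so the check can also be exercised outside a browser.
// A stricter check could additionally await nav.gpu.requestAdapter().
function pickDevice(nav = globalThis.navigator) {
  return nav && 'gpu' in nav ? 'webgpu' : 'wasm';
}

// Hypothetical helper: builds a feature-extraction pipeline on the best available device.
async function createExtractor() {
  // Imported lazily so the library is only loaded when a pipeline is actually needed.
  const { pipeline } = await import('@huggingface/transformers');
  return pipeline('feature-extraction', 'Xenova/all-MiniLM-L6-v2', {
    device: pickDevice(), // 'webgpu' if available, else the WebAssembly backend
  });
}
```

Falling back to `'wasm'` keeps the same code path working in browsers without WebGPU, only more slowly.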

One of the biggest changes in v3 is the support for a broader range of models. You're not limited to just text classification or generation anymore. Transformers.js now accommodates more complex and multimodal use cases.

Vision models: Support now includes models like CLIP, SAM (Segment Anything Model), and image classification models. You can run them straight from the browser with no server involved.

Audio models: Whisper, Wav2Vec2, and others are part of the new lineup. That means speech-to-text in the browser without external APIs.

Multimodal models: Some models now combine text and image inputs, giving more flexibility for building creative tools like image captioning or visual question answering.

These additions aren’t just for experimentation. They’re fast enough to use in real applications, whether it’s an educational tool, a demo app, or even a browser extension that needs local inference.
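To make the three categories concrete, here is a rough sketch that pairs each one with a pipeline task. The `EXAMPLES` table and `runExample` helper are hypothetical names, and the model IDs are assumptions; any compatible ONNX model from the Hugging Face Hub could be swapped in. The sketch assumes a browser environment with the `@huggingface/transformers` package available through a bundler.

```javascript
// Hypothetical lookup table: one example task/model pair per category above.
const EXAMPLES = {
  vision: { task: 'image-classification', model: 'Xenova/vit-base-patch16-224' },
  audio: { task: 'automatic-speech-recognition', model: 'Xenova/whisper-tiny.en' },
  multimodal: { task: 'image-to-text', model: 'Xenova/vit-gpt2-image-captioning' },
};

// Runs one of the examples on `input`, the URL of an image or audio file.
async function runExample(kind, input) {
  const example = EXAMPLES[kind];
  if (!example) throw new Error(`Unknown example kind: ${kind}`);
  // Imported lazily so the library is only loaded when an example actually runs.
  const { pipeline } = await import('@huggingface/transformers');
  const pipe = await pipeline(example.task, example.model);
  return pipe(input);
}
```

Calling `runExample('audio', audioUrl)` would, under these assumptions, resolve to an object whose `text` field holds the transcription, with everything running on the user's machine.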

In previous versions, each task felt somewhat siloed. You had to manually set things up depending on the model type. In v3, Transformers.js makes this more automatic.

You now use pipeline() to define the task, and the library figures out the model type, tokenizer, and pre/post-processing steps. The goal is to mirror Hugging Face’s Python API more closely, and for the most part, it works really well.

So instead of juggling different setups, you can just do something like:

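```js
import { pipeline } from '@huggingface/transformers';

const pipe = await pipeline('image-classification'); // no model given, so the task's default is used
const result = await pipe(imageElement.src); // pass the image URL; imageElement is an <img> already on the page
```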

It’s that simple.
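What comes back is just as easy to work with. For image classification, the result is assumed here to be an array of `{ label, score }` objects. The sketch below picks the best one; `topPrediction` is a hypothetical helper, and the sample values are made up for illustration rather than taken from a real model run.

```javascript
// Picks the highest-scoring entry from a list of { label, score } objects.
function topPrediction(results) {
  if (!Array.isArray(results) || results.length === 0) return null;
  return results.reduce((best, r) => (r.score > best.score ? r : best));
}

// Illustrative values only, not real model output.
const sample = [
  { label: 'tabby cat', score: 0.71 },
  { label: 'tiger cat', score: 0.22 },
  { label: 'lynx', score: 0.03 },
];

console.log(topPrediction(sample).label); // prints "tabby cat"
```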

Model sizes are always a concern when running inference in the browser. The team behind Transformers.js has added several updates to make this more manageable.

Previously, loading a model often meant pulling the entire file from Hugging Face’s hub—even if you didn’t need all of it right away. Now, Transformers.js supports lazy loading. This means that only the necessary parts of the model are loaded when you need them.

So, if you're only using the model for certain tasks or on small inputs, you don't pay the full cost upfront. This is especially helpful for larger models like Whisper or SAM.

Another win: better support for quantized models. This allows you to use smaller versions of models (for example, int8 versions) without having to retrain or compromise too much on performance. These quantized models load faster and use less memory—something that's critical when you're trying to deliver a good user experience directly in the browser.
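A bit of arithmetic shows why this matters. The sketch below estimates download size from parameter count and bytes per weight, then requests an 8-bit model through the `dtype` option of `pipeline()`. The estimate is deliberately rough: it ignores file metadata and any layers left unquantized. The figure of about 39 million parameters for Whisper tiny is approximate, and the helper names and model ID are assumptions.

```javascript
// Approximate bytes per weight for a few dtype names.
const BYTES_PER_WEIGHT = { fp32: 4, fp16: 2, q8: 1, q4: 0.5 };

// Rough weight size in MB (1 MB = 1e6 bytes) for a given parameter count and dtype.
function estimateWeightsMB(paramCount, dtype) {
  const bytes = BYTES_PER_WEIGHT[dtype];
  if (bytes === undefined) throw new Error(`Unknown dtype: ${dtype}`);
  return (paramCount * bytes) / 1e6;
}

console.log(estimateWeightsMB(39e6, 'fp32')); // 156
console.log(estimateWeightsMB(39e6, 'q8')); // 39

// Hypothetical helper: loads a speech-to-text pipeline with 8-bit weights.
async function loadQuantizedTranscriber() {
  const { pipeline } = await import('@huggingface/transformers');
  return pipeline('automatic-speech-recognition', 'Xenova/whisper-tiny.en', {
    dtype: 'q8', // request the 8-bit quantized weights
  });
}
```

Under these assumptions, the 8-bit weights are roughly a quarter of the full-precision download, which is the difference a user feels on first load.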

While performance and model diversity get most of the spotlight, v3 also brings several improvements that just make life easier for developers.

The API is now more consistent across tasks. Whether you're doing text generation or image segmentation, the interface behaves similarly. That reduces confusion and helps you build quickly.

Instead of vague errors or cryptic stack traces, v3 introduces clearer messages. If your inputs are misformatted or a required dependency is missing, the library gives a more human-readable hint about what’s wrong.

Transformers.js used to require a good deal of manual work to get the input tensors right, especially for image and audio tasks. Now, preprocessing is more streamlined, with better defaults and built-in helpers. That means less boilerplate and fewer headaches.

For example, if you're using a CLIP model through a pipeline, it handles image resizing, normalization, and tokenization of the accompanying text automatically. No need to guess what shape the input tensor should be.
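Here is a minimal sketch of that CLIP case, using the `zero-shot-image-classification` task. You pass an image URL and a list of candidate labels, and the pipeline takes care of the tensors. The helper names and the model ID are assumptions, and `normalizeLabels` is only a convenience for tidying user input before it reaches the model.

```javascript
// Trims labels, drops empty ones, and removes duplicates.
function normalizeLabels(labels) {
  const cleaned = [...new Set(labels.map((l) => l.trim()).filter(Boolean))];
  if (cleaned.length === 0) throw new Error('Provide at least one candidate label');
  return cleaned;
}

// Hypothetical helper: scores an image against free-text labels with a CLIP model.
async function classifyWithClip(imageUrl, labels) {
  const { pipeline } = await import('@huggingface/transformers');
  const classifier = await pipeline(
    'zero-shot-image-classification',
    'Xenova/clip-vit-base-patch32',
  );
  // The pipeline resizes and normalizes the image and tokenizes the labels internally.
  return classifier(imageUrl, normalizeLabels(labels));
}
```

A call such as `classifyWithClip(url, ['cat', 'dog'])` would be expected to resolve to one score per label.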

Transformers.js v3 feels like a turning point. It’s not just about adding support for more models or tweaking performance. It’s about making in-browser machine learning practical, fast, and less of a hassle. With WebGPU bringing speed, new models bringing range, and API tweaks making it easier to use, the browser is now a place where real ML work can happen—no server needed.

If you’ve been waiting to try running complex ML tasks right in the browser, this is probably the version to start with. Hope you find this info worth reading. Stay tuned for more.

Advertisement

A step-by-step guide on how to use Midjourney AI for generating high-quality images through prompts on Discord. Covers setup, subscription, commands, and tips for better results

How to convert Python dictionary to JSON using practical methods including json.dumps(), custom encoders, and third-party libraries. Simple and reliable techniques for everyday coding tasks

Windows 12 introduces a new era of computing with AI built directly into the system. From smarter interfaces to on-device intelligence, see how Windows 12 is shaping the future of tech

Want to run AI models on your laptop without a GPU? GGML is a lightweight C library for efficient CPU inference with quantized models, enabling LLaMA, Mistral, and more to run on low-end devices

Discover 10 job types AI might replace by 2025. Explore risks, trends, and how to adapt in this complete workforce guide.

Want to use ChatGPT without a subscription? These eight easy options—like OpenAI’s free tier, Bing Chat, and Poe—let you access powerful AI tools without paying anything

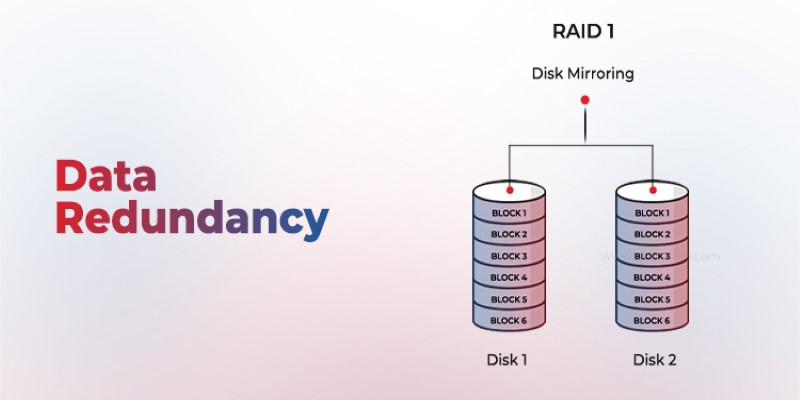

Is your system storing the same data more than once? Data redundancy can protect or complicate depending on how it's handled—learn when it helps and when it hurts

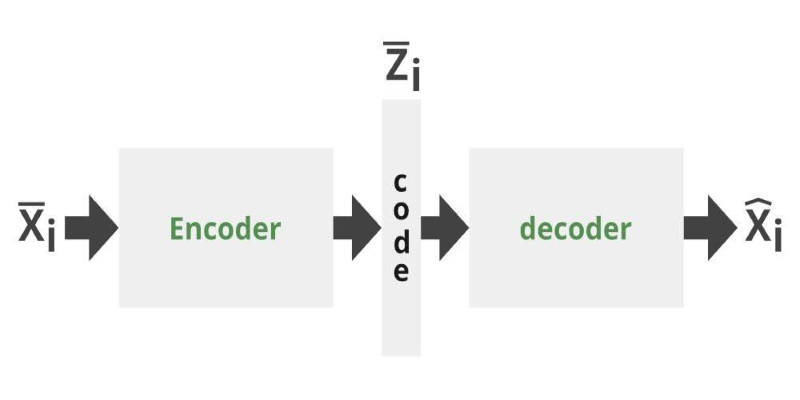

Can you get the best of both GANs and autoencoders? Adversarial Autoencoders combine structure and realism to compress, generate, and learn more effectively

What happens when transformer models run faster right in your browser? Transformers.js v3 now supports WebGPU, vision models, and simpler APIs for real ML use

See how Intelligent Process Automation helps businesses automate tasks, reduce errors, and enhance customer service.

GenAI is proving valuable across industries, but real-world use cases still expose persistent technical and ethical challenges

How 7 popular apps are integrating GPT-4 to deliver smarter features. Learn how GPT-4 integration works and what it means for the future of app technology