Advertisement

When working with digital systems, storing the same piece of information in more than one place is not uncommon. This is called data redundancy. It can happen by design or by accident, but either way, it means multiple copies of the same data are present across files, systems, or databases.

Sometimes, redundancy is helpful, especially when it comes to keeping data safe or accessible. Other times, it turns into a mess—slowing down systems, wasting storage, or creating confusion about which copy is the most accurate. That's why understanding how to manage redundancy makes a real difference.

In plain language, redundancy of data is duplicating the same data multiple times. Two departments in a business holding each department's version of the employee database with the same names, phone numbers, and addresses is an example of redundancy. It may occur between spreadsheets, cloud systems, or even within the same system.

This duplication can be intentional, such as for backup and recovery purposes, or unintentional due to poor design, miscommunication, or a lack of data integration. Both forms affect how systems perform and how reliable the data becomes over time.

One of the most common reasons to duplicate data is to create a backup. In case of system failure, cyberattack, or accidental deletion, having a copy stored somewhere else can save the day. Instead of losing everything, the system can be restored using the backup file.

In distributed systems, redundancy improves performance by storing copies of data closer to the user. Instead of pulling information from a remote server, users get faster access from a local or regional copy. This makes a real difference in systems that serve users across the globe.

Redundant data helps keep things running smoothly if one part of the system fails. Let’s say a server goes offline. If the same data is available on another server, operations continue without disruption. This sort of setup is especially useful in high-availability systems.

Companies that depend on continuous access to their data often rely on redundancy to reduce downtime. Whether it’s a financial platform, hospital records, or logistics software, redundancy ensures that everything keeps running—even when problems crop up in the background.

Storing the same thing over and over eats up space. Especially in systems where storage is limited or expensive, repeated data entries can become a silent cost that piles up over time.

If one copy of the data is updated and the others are not, things get messy. You end up with conflicting records—say, one customer database says the phone number is updated, while the other still lists the old one. Sorting out which is correct takes extra effort and can delay decision-making.

The more copies of data exist, the harder it becomes to manage. Updating every version manually isn’t realistic, especially in large systems. And if automation isn’t in place, even small changes become prone to error.

Searching through a database with a lot of redundant data slows things down. Systems have to process more information than necessary, and the same result might show up multiple times. For applications that depend on quick responses, that kind of delay is a deal-breaker.

Since redundancy can either help or hurt depending on how it's handled, knowing how to control it is key. Here are some strategies to manage it effectively.

One way to limit unnecessary duplication is by organizing the database through normalization. This process breaks data into smaller, more logical tables that reduce repetition. Each piece of information is stored just once and linked through relationships.

For example, instead of entering a customer’s address in every order they make, the system stores the address in one place and links each order back to it. That way, changing the address updates it across the system automatically.

Many organizations store data in multiple places—sales records in one system, support tickets in another, and inventory in a third. Integration tools help bring these sources together and reduce unnecessary duplication.

These tools check for similar entries and merge them or flag them for review. This leads to a cleaner, more organized system that’s easier to trust and manage.

Backups are a form of intentional redundancy, but they don’t have to slow down your system or take up too much space. A smart approach is to use incremental backups—where only the changes made since the last backup are stored—rather than copying everything each time.

Scheduling backups during off-peak hours, encrypting backup data, and storing them in secure, offsite locations all help reduce the impact of redundancy while keeping data safe.

Decide early on how data should be updated and by whom. If multiple departments have access to the same records, create rules that prevent duplicate entries or edits from causing conflicts.

For instance, setting up a master record that others can reference (but not edit) helps ensure that one version of the truth is maintained. Automated alerts for duplicate entries can also help staff catch issues before they spread.

Data redundancy is one of those things that can either support or sabotage a system, depending on how it's handled. When done with purpose—like in backups or distributed networks—it improves performance, protects against loss, and keeps systems running. But when left unchecked, it leads to inconsistency, waste, and slowdown.

The key is to recognize where redundancy is useful and where it's not. Using smart storage practices, reliable integration tools, and clear rules for updates can turn redundancy from a liability into a practical advantage. So before storing the same data twice, it helps to ask: is this a safeguard or just clutter?

Advertisement

Curious about data science vs software engineer: which is a better career? Explore job roles, skills, salaries, and work culture to help choose the right path in 2025

How AI in mobiles is transforming smartphone features, from performance and personalization to privacy and future innovations, all in one detailed guide

Discover 10 job types AI might replace by 2025. Explore risks, trends, and how to adapt in this complete workforce guide.

Want to use ChatGPT without a subscription? These eight easy options—like OpenAI’s free tier, Bing Chat, and Poe—let you access powerful AI tools without paying anything

XAI’s Grok-3 AI model spotlights key issues of openness and transparency in AI, emphasizing ethical and societal impacts

Want to run AI models on your laptop without a GPU? GGML is a lightweight C library for efficient CPU inference with quantized models, enabling LLaMA, Mistral, and more to run on low-end devices

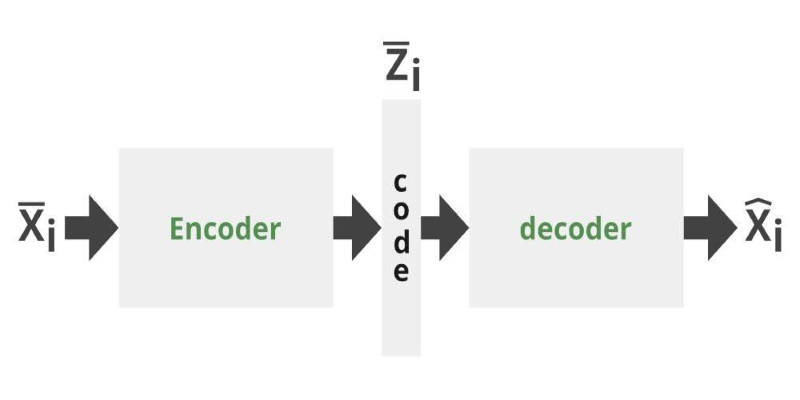

Can you get the best of both GANs and autoencoders? Adversarial Autoencoders combine structure and realism to compress, generate, and learn more effectively

How Remote VAEs for decoding with inference endpoints are shaping scalable AI architecture. Learn how this setup improves modularity, consistency, and deployment in modern applications

See how Intelligent Process Automation helps businesses automate tasks, reduce errors, and enhance customer service.

How switching from chunks to blocks is accelerating uploads and downloads on the Hub, reducing wait times and improving file transfer speeds for large-scale projects

How to convert Python dictionary to JSON using practical methods including json.dumps(), custom encoders, and third-party libraries. Simple and reliable techniques for everyday coding tasks

Curious about GPT-5? Here’s what to know about the expected launch date, key improvements, and what the next GPT model might bring