Over the past few months, GGML has been making waves among developers, especially those working with AI models on devices with limited memory. Whether the goal is getting large language models to run on a basic laptop or cutting response times in smaller apps, GGML keeps coming up. But what is it exactly?

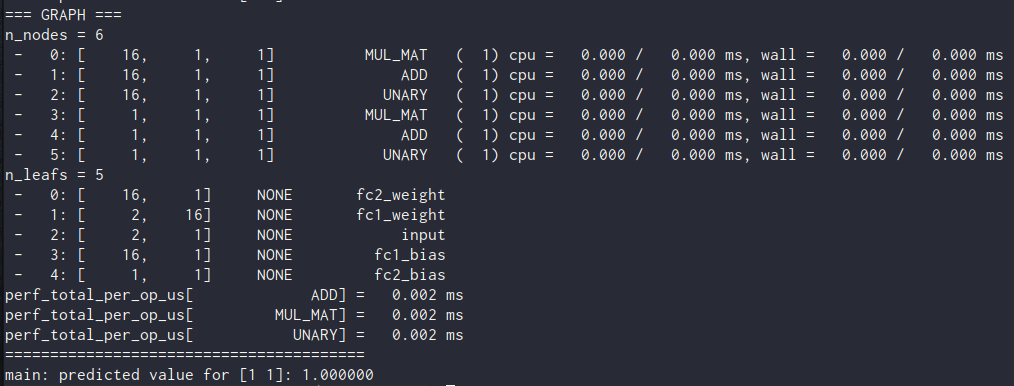

To put it simply, GGML is a tensor library designed to run machine learning models efficiently on the CPU. It doesn’t need a GPU, it doesn’t ask for a ton of memory, and it gets the job done with remarkable speed—especially when you’re using quantized models. Let’s take a closer look at what makes GGML tick, how it works, and what makes it different from other ML libraries.

GGML stands for "Georgi Gerganov Machine Learning," named after its creator. It's written in plain C, which lends it a no-frills appeal. No dependencies and no complicated setup. If you've ever wrestled with getting CUDA or TensorRT to behave, you'll probably appreciate how low-key GGML feels by comparison.

It was built with one main idea in mind: run ML models without needing a high-end setup. This is especially useful for anyone trying to work locally, whether to save on cloud costs or simply for convenience.

A big part of what GGML does right is how it handles quantized models. These are reduced-size versions of large AI models, where the precision of the numbers is reduced—for example, from 32-bit to 4-bit. That might sound like a big downgrade, but the surprising part is that most models still perform well with minimal quality loss. And GGML knows how to make the most out of those smaller models.
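To make the idea concrete, here is a minimal Python sketch of block-wise 4-bit quantization. It is loosely modeled on the idea behind formats like Q4_0 (a group of weights sharing one scale factor), but it is not GGML's actual code or storage layout; the function names, the block size constant, and the sample weights are all made up for illustration.

```python
# Simplified block-wise 4-bit quantization (illustrative only, not GGML's real code).

BLOCK_SIZE = 32  # assumed number of weights that share one scale factor


def quantize_block(weights):
    """Map a block of floats to integers in [-8, 7] plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    # Choose the scale so the largest magnitude lands on 7; avoid dividing by zero.
    scale = max_abs / 7.0 if max_abs > 0 else 1.0
    quants = [max(-8, min(7, round(w / scale))) for w in weights]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from the 4-bit integers and the scale."""
    return [q * scale for q in quants]


# Hypothetical sample weights spread across roughly [-1, 1).
weights = [((i * 37) % 64 - 32) / 32.0 for i in range(BLOCK_SIZE)]

scale, quants = quantize_block(weights)
restored = dequantize_block(scale, quants)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"scale={scale:.4f}, max abs error={max_error:.4f}")
```

Each weight is stored as a small integer, and only one scale per block is kept at higher precision. The rounding error per weight is at most half the scale, which is why the quality loss is often modest.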

Running large AI models typically requires a GPU, but GGML flips that idea on its head. It's built to take full advantage of modern CPU features—especially SIMD instructions like AVX and AVX2. These are the same instructions that help speed up video processing or audio editing software, and GGML taps into them to speed up ML tasks.

There’s no need to install massive driver packages or tinker with dependencies. The library keeps things tight, and that's a significant reason why it runs smoothly on various operating systems—Windows, macOS, Linux, and even Android and iOS if you want to go that far.

One thing that helps performance a lot is how GGML uses memory. Model weights are kept in RAM during inference (llama.cpp, for instance, memory-maps the model file by default), which avoids repeated file reads. This alone makes a difference, especially on machines with slower disks.

On top of that, the structure of the models is flattened and simplified for faster access. So, even if you're working with a scaled-down version of a transformer model, the actual inference is relatively fast and lightweight.

When you're trying to run something like LLaMA 2 or Mistral on a regular machine, the full-precision versions usually won't fit in memory. You need smaller models, and that's where quantization comes in. GGML supports multiple quantization formats like Q4_0, Q4_K, Q5_1, and so on, each offering different trade-offs between size, speed, and accuracy.

For example, if you're okay with a small drop in accuracy, Q4_0 might be perfect. If you need a little more precision and have a bit more memory to spare, you can go with Q5_1. GGML allows you to choose what works best for your setup and model.
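A rough back-of-the-envelope calculation shows what that trade-off means for memory. The sketch below assumes the per-block byte counts as I understand GGML's layouts (32 weights per block, with a 2-byte scale and, for Q5_1, a 2-byte minimum) and a hypothetical 7-billion-parameter model. Real files differ somewhat because some tensors stay at higher precision and files carry metadata.

```python
# Rough model-size estimate per quantization type (a sketch, not exact file sizes).

# name -> (weights per block, bytes per block); values are assumptions based on
# my understanding of GGML's block layouts.
BLOCK_LAYOUTS = {
    "F16": (1, 2),     # plain 16-bit floats, no blocking
    "Q4_0": (32, 18),  # 16 bytes of 4-bit values + 2-byte scale
    "Q5_1": (32, 24),  # 16 bytes low bits + 4 bytes high bits + scale + minimum
    "Q8_0": (32, 34),  # 32 bytes of 8-bit values + 2-byte scale
}


def bits_per_weight(name):
    """Average storage cost of one weight, including the per-block scale."""
    weights, nbytes = BLOCK_LAYOUTS[name]
    return nbytes * 8 / weights


def estimated_size_gb(n_params, name):
    """Approximate weight storage in gigabytes (10**9 bytes)."""
    return n_params * bits_per_weight(name) / 8 / 1e9


N_PARAMS = 7_000_000_000  # hypothetical 7B-parameter model

for name in BLOCK_LAYOUTS:
    print(f"{name:5s} {bits_per_weight(name):5.2f} bits/weight "
          f"~{estimated_size_gb(N_PARAMS, name):5.2f} GB")
```

Under these assumptions, moving from 16-bit floats to Q4_0 cuts the weight storage to a bit under a third, while Q5_1 spends about a third more space than Q4_0 in exchange for finer precision.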

One of the most common use cases is local chatbots. People build small, responsive LLM chat interfaces using quantized models and GGML as the backend. No server lag, no privacy concerns. Everything runs locally, and it runs fast enough for day-to-day use.

There's also llama.cpp, which is one of the most widely used projects that runs on top of GGML. It's a C/C++ program that loads LLaMA-family models, originally in GGML format and, in newer versions, in the successor GGUF format. It handles prompting and streaming responses and even supports interactive conversation loops. Running it takes a single command, and compared to the complexity of frameworks like PyTorch or TensorFlow, it's a breath of fresh air.

If you’re curious about how to get started with GGML, here’s a straightforward way to do it. You won’t need fancy tools or complex environments.

Start by picking a project that's built on GGML, such as llama.cpp or whisper.cpp. These projects often have everything pre-configured. Clone the repository using:

```bash
git clone https://github.com/ggerganov/llama.cpp
cd llama.cpp
```

Download a model that's already been converted to a format the project can load. Older releases used GGML .bin files, while current llama.cpp releases expect GGUF (.gguf) files, so match the file to the version you built. Some communities and sites offer pre-quantized models; be sure to follow the license rules of the original model.

Most GGML-based projects come with a simple makefile. Just run:

```bash
make
```

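This compiles everything needed using your system's default compiler. On Windows, you may need to use CMake or install a toolchain like MSYS2, but the process is straightforward overall. Recent llama.cpp versions build with CMake on every platform, so check the repository's README if make fails.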

Once the model is in place and the code is compiled, you can run inference like this (recent llama.cpp builds name the binary llama-cli rather than main):

```bash
./main -m models/your-model.bin -p "What is the capital of France?"
```

The model will load, and on most machines with a small quantized model you'll see a response within seconds. From here, you can build scripts, bots, apps, or whatever else you need.
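As one way to start scripting, here is a minimal Python sketch that wraps the command shown above with the standard subprocess module. The helper names build_command and run_prompt are hypothetical, and the binary and model paths are the placeholders from the example, so adjust them to your build. Only the -m and -p flags from the command above are used.

```python
# Minimal wrapper around the command-line binary (a sketch; paths are placeholders).
import subprocess


def build_command(binary, model_path, prompt):
    """Assemble the argument list: binary, -m <model>, -p <prompt>."""
    return [binary, "-m", model_path, "-p", prompt]


def run_prompt(binary, model_path, prompt, timeout=300):
    """Run one prompt and return whatever the program wrote to stdout.

    Raises subprocess.CalledProcessError if the program exits with an error,
    and subprocess.TimeoutExpired if it runs longer than `timeout` seconds.
    """
    result = subprocess.run(
        build_command(binary, model_path, prompt),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,
    )
    return result.stdout


# Build (but do not run) the command from the example above.
cmd = build_command("./main", "models/your-model.bin", "What is the capital of France?")
print(cmd)
```

Passing the arguments as a list rather than a single shell string means the prompt needs no manual quoting. Calling run_prompt with the same three arguments would execute the binary and return its output as text.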

GGML stands out because it does the job without the usual overhead. It's lean, it's practical, and it's changing how people run AI models on local devices. You don't need a GPU, a cloud server, or a massive budget. If you've got a decent CPU and some memory to spare, that's enough.

It’s not about trying to replace high-end tools or frameworks. It’s about having a fast, simple option that just works. GGML offers that, and more people are starting to realize how useful it really is.
