Advertisement

For anyone working with large-scale data sharing, Speed is a constant concern. Whether you're uploading machine learning models, datasets, or applications to a collaborative hub or downloading them to use locally, sluggish transfer speeds can slow down progress and burn time. That's where smarter data handling methods come into play.

The traditional method—splitting files into chunks—has been reliable but comes with performance trade-offs. A shift is happening from this old method to a more efficient one based on "blocks." This change quietly improves file transfer speeds for developers and researchers.

File uploads and downloads on platforms like the Hub typically involve breaking large files into smaller parts to make transmission manageable. This is known as chunking. While it helps with resumable uploads and fault tolerance, it wasn't originally designed with scale and concurrency in mind. With more users working with multi-gigabyte models and data, the need to speed up these transfers has grown. Chunk-based systems can hit a ceiling, especially when network congestion or files contain many tiny differences.

The move to a block-based system introduces a more refined way of slicing and identifying file content. Rather than relying on static-sized chunks, blocks are determined by content patterns. This makes them more flexible and, in many cases, significantly reduces the total data that needs to be moved during uploads or downloads. In practice, if only part of a file has changed, only that changed block gets transmitted. The rest, already stored on the Hub or your device, doesn’t get re-sent or re-downloaded.

This system also supports block deduplication, eliminating repeated transfer of identical blocks across different files or versions. For example, if you're uploading a new model that shares a lot of structure with an older one, the shared parts won't be sent again. It may sound like a small change, but it can make a huge difference when working at scale.

Switching to blocks isn't just a matter of slicing files differently. It requires a deeper change in how data is processed, hashed, stored, and retrieved. With blocks, each file segment is hashed using content-defined boundaries rather than fixed sizes. This makes the detection of similar or unchanged data far more accurate. It also means smaller modifications—like updating just a few lines in a code file—no longer require re-uploading or downloading the whole file or even an entire chunk.

The system keeps a reference to these blocks, allowing the platform to assemble files on demand. So, instead of treating each upload or download as a standalone event, it treats it as a smart sync. You're only moving what's necessary; everything else comes from pre-existing, cached content.

This approach has other benefits, too. It leads to smaller metadata files, faster indexing, and lower server load. When thousands of people interact with large files simultaneously, every bit of saved bandwidth and processing time counts.

The new method also allows for long-term caching. Once a block has been uploaded or downloaded, it can be reused for other files that contain the same data. Over time, this builds an internal "library" of reusable content. That means that as more people use the Hub, performance improves for individuals and the whole community.

The Hub is where thousands of models and datasets are stored, shared, and versioned. Each model update, dataset tweak, or experiment could involve moving hundreds of megabytes, if not gigabytes, back and forth. Speed matters.

With blocks, uploading a modified model version is faster because only the new or changed blocks are uploaded. This can turn a 500MB upload into just a few megabytes. Similarly, downloading models is quicker, especially when users already have previous versions cached locally. They just pull the new parts, and the system rebuilds the rest from what they already have.

The other big improvement comes from reliability. Interrupted downloads no longer mean starting over. Since the system tracks which blocks are already present, transfers can resume where they left off with precision. And because the hash of each block is verified, corrupted transfers are nearly eliminated.

Beyond Speed, this approach supports better collaboration. Teams working on different model branches often share large amounts of base data. Block-level deduplication makes this sharing lightweight and efficient without duplicating storage or bandwidth for anyone working in collaborative AI or data science, which makes the whole experience feel lighter and smoother.

The move from chunks to blocks isn’t just a backend upgrade—it’s a shift in how data is handled at scale. It brings performance improvements, yes, but it also reshapes what's possible when working with large files on the Hub. Syncing becomes faster, collaboration becomes easier, and storage becomes more efficient.

The broader implication is that this method allows more dynamic data sharing. You could see faster syncing for mobile or edge devices, quicker deployment times for fine-tuned models, or even easier management of large datasets that change frequently. As more platforms adopt block-based handling, users will likely expect this Speed and flexibility as a baseline.

It also reinforces a larger pattern in software infrastructure—systems getting smarter about redundancy, caching, and partial updates. Whether it’s models, datasets, or full projects, efficiently reusing and repurposing data without re-transmitting it is key for scaling AI development.

Switching from chunks to blocks noticeably improves file handling on the Hub. Uploads are quicker, downloads are more efficient, and collaboration becomes smoother. By avoiding redundant transfers and using intelligent caching, the system adapts to the needs of modern workflows. This shift isn’t just technical—it directly improves how users interact with large models and datasets. It’s a clear step forward in making file management faster, smarter, and more responsive for everyone working with AI and data.

Advertisement

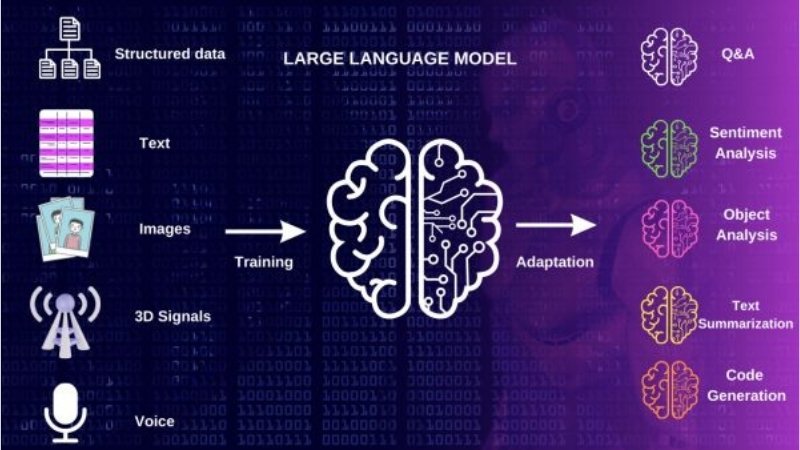

Multilingual LLM built with synthetic data to enhance AI language understanding and global communication

Which data science startups are changing how industries use AI? These ten U.S.-based teams are solving hard problems with smart tools—and building real momentum

How to convert Python dictionary to JSON using practical methods including json.dumps(), custom encoders, and third-party libraries. Simple and reliable techniques for everyday coding tasks

Want to run AI models on your laptop without a GPU? GGML is a lightweight C library for efficient CPU inference with quantized models, enabling LLaMA, Mistral, and more to run on low-end devices

AI agents aren't just following commands—they're making decisions, learning from outcomes, and changing how work gets done across industries. Here's what that means for the future

Discover 10 job types AI might replace by 2025. Explore risks, trends, and how to adapt in this complete workforce guide.

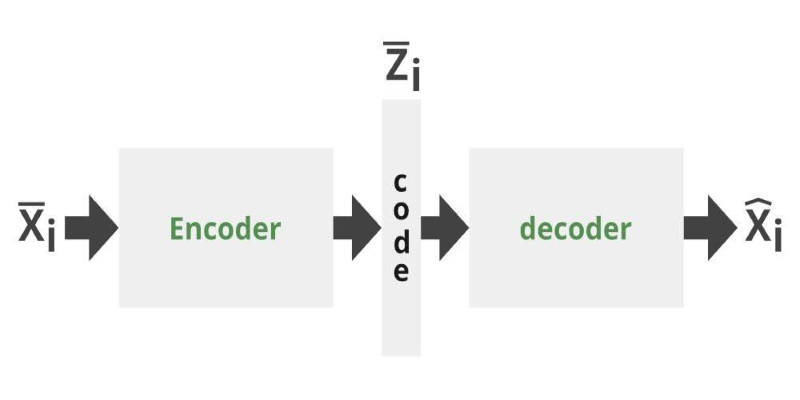

Can you get the best of both GANs and autoencoders? Adversarial Autoencoders combine structure and realism to compress, generate, and learn more effectively

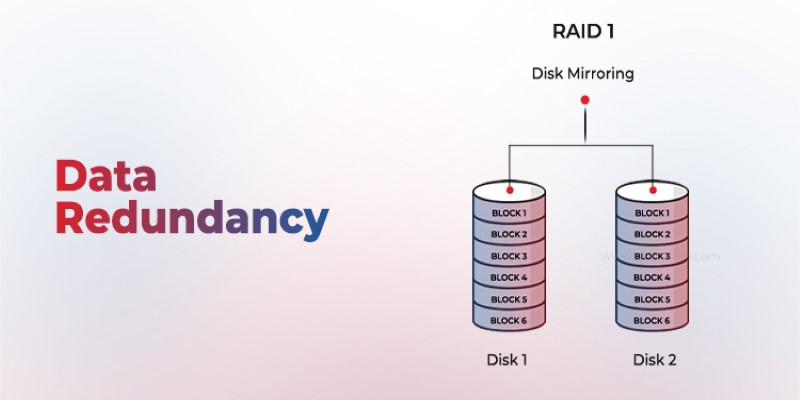

Is your system storing the same data more than once? Data redundancy can protect or complicate depending on how it's handled—learn when it helps and when it hurts

Curious about the evolution of Python? Learn what is the difference between Python 2 and Python 3, including syntax, performance, and long-term support

Curious about data science vs software engineer: which is a better career? Explore job roles, skills, salaries, and work culture to help choose the right path in 2025

GenAI is proving valuable across industries, but real-world use cases still expose persistent technical and ethical challenges

GenAI is proving valuable across industries, but real-world use cases still expose persistent technical and ethical challenges