Advertisement

Working with CSV files often feels like a balancing act between flexibility and frustration. They're simple, readable, and widely used, but they lack the structure of a real database. On the other hand, SQL is structured and powerful, but it is traditionally tied to servers or database systems.

What many people don’t realize is how much you can get done by combining both. Whether you're managing data for a small business, testing models, or preparing datasets, learning how to use SQL with CSVs can save time and open up new ways to handle data without needing a full database setup.

It might surprise some people, but you don’t need a massive database server to query data using SQL. Many tools and libraries allow you to run SQL queries directly against CSV files. For instance, Python's pandasql and sqlite3 libraries can load CSVs into memory and let you write SQL queries without any extra configuration. Another useful tool is DuckDB, which works with CSVs natively and supports fast SQL queries. This setup removes the need for schema files or lengthy imports—just point to the file, define the column types if needed, and you’re ready.

Working with SQL queries on CSVs makes filtering, joining, grouping, and aggregating much easier than trying to do it all manually in Excel or even plain Python. Rather than chaining a bunch of operations, you can write a single query like:

SELECT product, SUM(quantity) AS total_sold

FROM sales_data

WHERE sale_date >= '2024-01-01'

GROUP BY product

ORDER BY total_sold DESC;

This pulls out exactly what you need. No loops, no sorting functions—just one line of clear instructions. SQL also helps create repeatable queries. Therefore, instead of rewriting the logic, you can store these queries and run them whenever the file is updated.

When working with raw CSV files, the biggest challenge is usually cleaning the data. Inconsistent column names, missing values, extra whitespace, and strange formatting can cause big issues. Using SQL on top of CSVs allows you to handle these problems efficiently. You can use TRIM(), COALESCE(), and other SQL functions to manage bad data on the fly.

Say you have a CSV where the email field might be missing or inconsistent. You can write something like:

SELECT TRIM(LOWER(email)) AS cleaned_email

FROM users

WHERE email IS NOT NULL AND email LIKE '%@%';

This ensures the email values are in lowercase, extra spaces are removed, and only valid-looking entries are selected. This beats trying to write custom functions in Python or doing endless find-replace in spreadsheets. When you're cleaning a big dataset, these SQL techniques save a lot of time and make your process easier to reproduce.

Another common case is handling duplicates. With a simple SQL query, you can count repeated entries or even extract unique rows:

SELECT DISTINCT *

FROM customers;

Or for spotting duplicates:

SELECT name, COUNT(*) AS count

FROM customers

GROUP BY name

HAVING count > 1;

This type of quick analysis helps catch problems early, especially before importing the data somewhere else.

A common issue in real-world data work is that different teams or systems generate separate CSV files that need to be merged. This usually means matching keys across multiple files—something SQL is good at. You can treat multiple CSVs as separate tables and perform joins just like in a traditional database. Whether you're matching product IDs to sales records or aligning time-series data across logs, SQL handles this more cleanly than writing nested loops or if-else chains.

Here’s an example of how a join might work between two CSVs: products.csv and sales.csv.

SELECT p.product_name, SUM(s.quantity) AS total_sold

FROM sales s

JOIN products p ON s.product_id = p.id

GROUP BY p.product_name;

The result is a clean summary of product sales using proper names instead of cryptic IDs. Using SQL with CSVs this way gives structure to otherwise flat files, allowing you to create reports, summaries, and filtered views that would be difficult with spreadsheets alone.

Another great use of joins is dealing with metadata. If you have a main CSV of transactions and a separate one that maps store IDs to locations, a join lets you pull in those location details easily. This makes it much easier to prepare data for dashboards or send summaries by region or store.

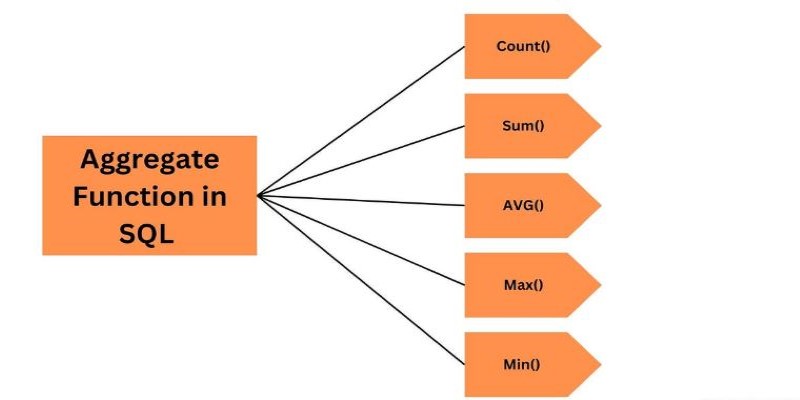

Once your data is loaded, cleaned, and combined, analysis becomes much more efficient using SQL. With just a few lines, you can compute statistics, identify trends, or prepare the dataset for machine learning tasks. SQL provides aggregate functions, such as SUM(), AVG(), COUNT(), MIN(), and MAX(), which enable you to quickly summarize large datasets.

Say you want to find the average order value per customer:

SELECT customer_id, AVG(order_total) AS avg_order_value

FROM orders

GROUP BY customer_id;

That would take many steps in a spreadsheet or a full script in most programming languages. SQL gives a direct path.

Filtering is just as clean. If you want only high-value customers:

SELECT customer_id

FROM orders

GROUP BY customer_id

HAVING SUM(order_total) > 1000;

It's readable, logical, and adaptable. You can easily tweak it and run it again as new data becomes available.

If you're using a tool like SQLite, DuckDB, or even Excel with a plugin, you can store these queries and use them repeatedly. This is ideal for situations where data is updated weekly or monthly, but the analysis questions remain the same.

SQL with CSVs makes it easy to extract quick insights. Filter by date and use LIMIT for top results, or prepare clean datasets for regression by selecting specific columns, applying filters, and exporting the refined data to a new file.

SQL doesn’t require writing functions or maintaining long scripts. Once you understand the syntax, the same logic applies across different datasets and tools.

Using SQL with CSV files turns plain data into something more structured and usable. It removes the need for complicated scripts or endless spreadsheet steps. SQL lets you clean, join, and analyze data with clear and reusable queries. Tools like SQLite and DuckDB work without heavy setup, making it simple to run SQL directly on your files. This approach saves time and helps you handle data more efficiently on your terms.

Advertisement

Curious about GPT-5? Here’s what to know about the expected launch date, key improvements, and what the next GPT model might bring

Which data science startups are changing how industries use AI? These ten U.S.-based teams are solving hard problems with smart tools—and building real momentum

Curious about data science vs software engineer: which is a better career? Explore job roles, skills, salaries, and work culture to help choose the right path in 2025

What happens when transformer models run faster right in your browser? Transformers.js v3 now supports WebGPU, vision models, and simpler APIs for real ML use

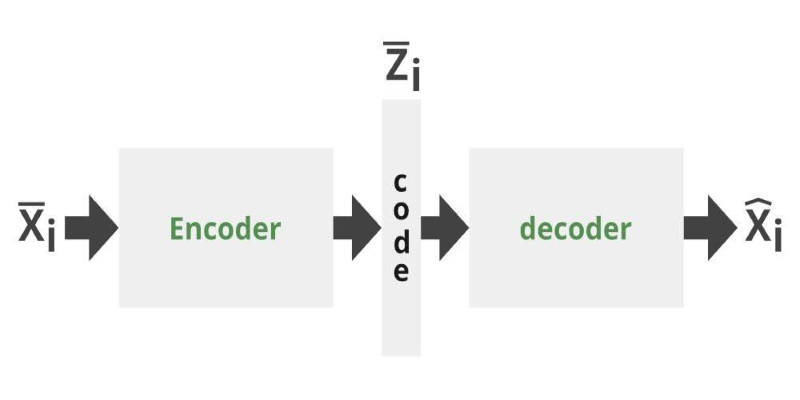

Can you get the best of both GANs and autoencoders? Adversarial Autoencoders combine structure and realism to compress, generate, and learn more effectively

How the Hugging Face embedding container simplifies text embedding tasks on Amazon SageMaker. Boost efficiency with fast, scalable, and easy-to-deploy NLP models

XAI’s Grok-3 AI model spotlights key issues of openness and transparency in AI, emphasizing ethical and societal impacts

Windows 12 introduces a new era of computing with AI built directly into the system. From smarter interfaces to on-device intelligence, see how Windows 12 is shaping the future of tech

How Python Tuple Methods and Operations work with real code examples. This guide explains tuple behavior, syntax, and use cases for clean, effective Python programming

See how Intelligent Process Automation helps businesses automate tasks, reduce errors, and enhance customer service.

Want to run AI models on your laptop without a GPU? GGML is a lightweight C library for efficient CPU inference with quantized models, enabling LLaMA, Mistral, and more to run on low-end devices

A step-by-step guide on how to use Midjourney AI for generating high-quality images through prompts on Discord. Covers setup, subscription, commands, and tips for better results